I had the good fortune of starting my career at a signature moment in the computer revolution: the microprocessor had just been invented, and it was rapidly being incorporated into the many tools that leverage our human intelligence. Among those tools are the instruments we use to measure the world around us.

Up until then, instruments that required some amount of interaction used very direct physical interfaces: knobs, buttons, and dials for input; meters, gauges, and chart recorders for output. They could be wired in intricate arrangements, but there were limits to how complex their measurements could be.

The microprocessor changed this by providing an inexpensive logic element that could monitor and manage much more complex channels of interaction: switches, keypads, digital displays, sensors, data terminals, printers, transducers, and actuators were now on the list. It became possible to make better measurements than ever before, as well as measurements that had previously been too complex to perform at all. As a result, there was a renaissance in instrumentation.

In the 1970s, it was a marketing tactic to claim that your new product incorporated a microprocessor. Many of us would take credit for having designed the “world’s first microprocessor-based X”, where X could be just about anything (including such things as toasters, having “bread brains”). In my case, I designed the first microprocessor-based engineering seismograph, an instrument that recorded the small acoustic signals detected by geophones when the ground was impacted by a large hammer or a small detonation, for the purpose of discerning what materials lay beneath the surface.

In those days, the microprocessor was embedded within the instrument. It expanded the capabilities of the instrument without increasing the complexity of using it; the instrument was still self-contained. You could power it up, make your measurements, and have the results presented on a display, or printed onto a strip of paper. No additional support devices were required.

Eventually, however, instruments would connect to full-featured computers, at first as a convenience for transferring measurement data to where it would be examined and analyzed, and later so that the computer could fully control the operation of the instrument. This made automated and remote operation of the instrument possible.

It became apparent that the external computer could also take over the control and management of the instrument itself, interacting directly with its transducers. This created yet another leap in instrument functionality: the “instrument” part of the system concentrated on the basics of the physical measurement and delegated the timing and control to a supervisory computer, which in turn was made highly flexible by the use of general-purpose software tools. Ever more sophisticated and/or economical measurements could be made with this integrated arrangement.

But now the instrument was no longer self-contained; it had become dependent on the external computer for its successful operation. The tradeoff for increased capabilities at reduced expense seemed worth it.

A friend of mine who shops for used lab equipment bemoans this development, and I now understand why. I recently acquired a used spectroradiometer that was designed in the 1990s, during the second renaissance of instrument evolution. It was very expensive, perhaps $20,000 at the time, and it took exquisitely sensitive, high-resolution spectra. It was a workhorse instrument for two decades but is now trapped in its relationship with the 1990s-era computer to which it is tethered.

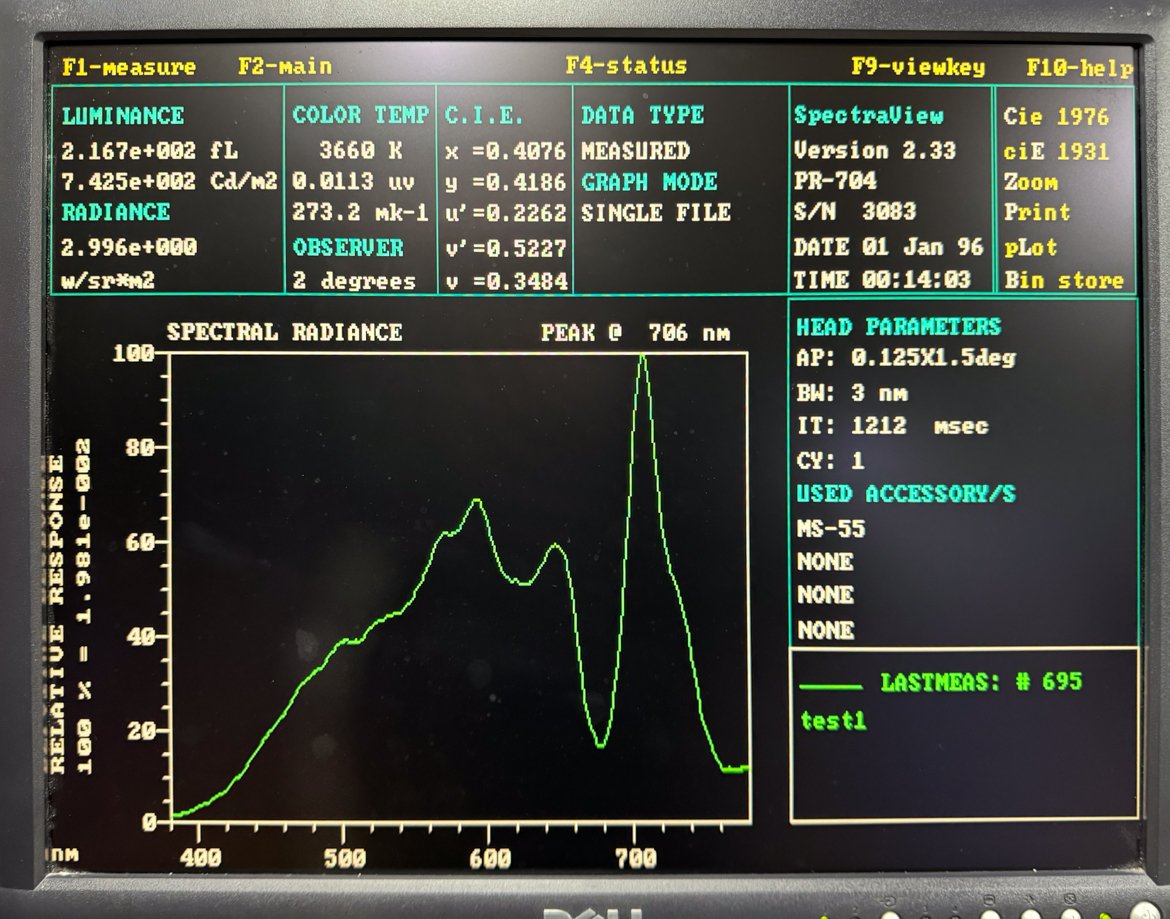

The SpectraScan PR704 is reminiscent of an old VHS video camera in size and shape. An eyepiece shows the view through the lens and marks a tiny area at the center of the scene whose light will be sent through precision optics that split it into its spectral components. Sensors and electronics report an intensity amplitude for each wavelength across the visible spectrum.

This is the “head” of the instrument. It connects via an umbilical cord to an interface card in an IBM PC, a model that has not been manufactured in this millennium. The operating system is MS-DOS, the command-line-driven system on which Microsoft built its business in the 1980s. Specialized software, provided on 5-1/4” floppy disks and installed on a 250MB hard drive, knows how to control the sensor head via its interface card and make a spectral measurement. The measurements can be saved to the hard drive, and if you want to export them somewhere else, they can be copied to a floppy disk.

This arrangement served well for years. The instrument was dedicated to the task of taking spectral measurements in a well-defined manufacturing environment, so the increasing obsolescence of its host computer went unnoticed.

During its years of isolation, MS-DOS was replaced by Windows-3, NT, Windows-95, 2000, XP, Vista, and every version since. Operators no longer used the archaic commands of MS-DOS. Personal computers exchanged the 5-1/4” floppy for the 3-1/2” size, then abandoned floppy disks altogether. They became networked and adopted USB interfaces for input/output and storage devices.

The SpectraScan instrument was now orphaned, with no way to get its measurement data to any useful modern computer.

Fortunately, before it was truly too late, its computer was updated to a model that still supported the interface to the measurement head and could emulate MS-DOS; although it had no floppy drives, it did have a USB port that supported a thumb drive. It was probably the last opportunity to make this update, as subsequent computer models would eliminate support for its interface hardware. The operating system was Windows-ME (“Millennium Edition”), released in 2000.

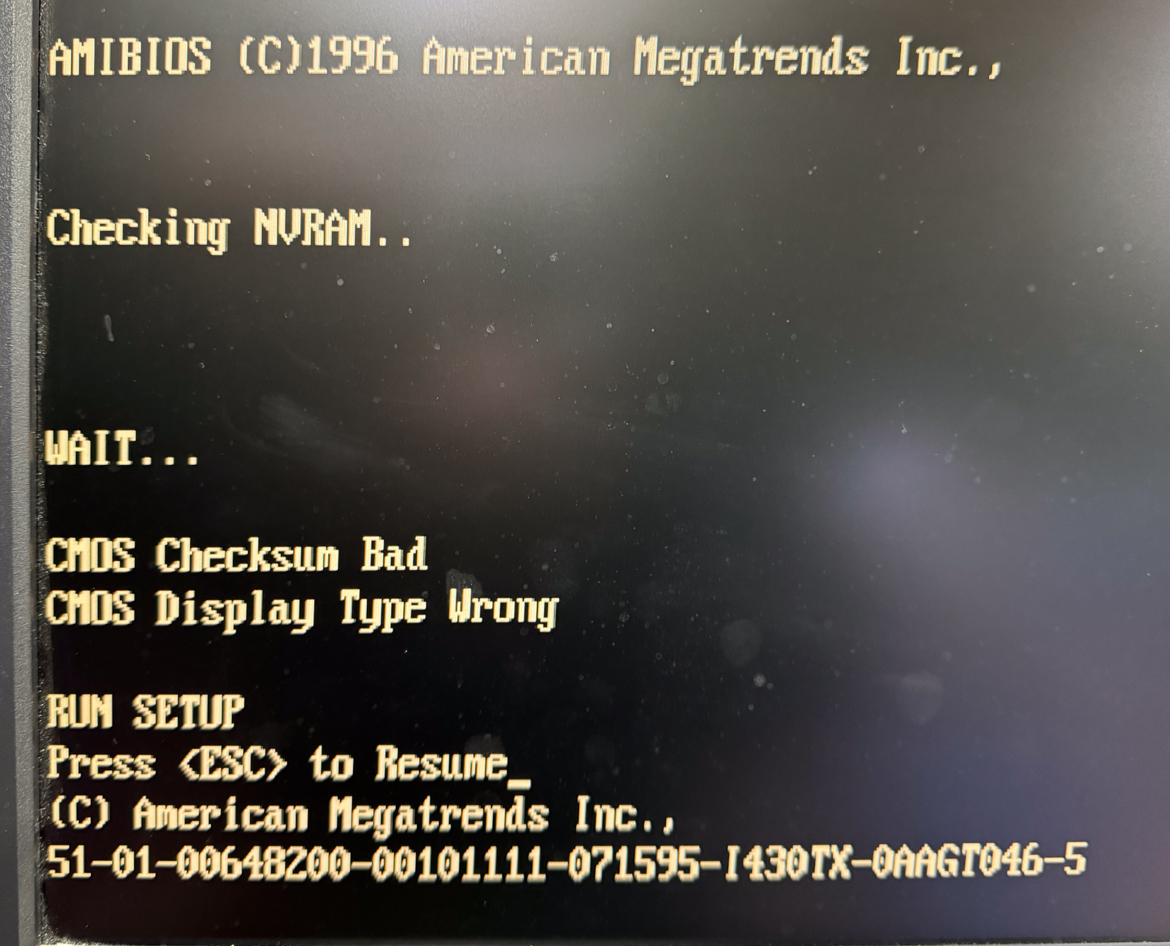

This gave the instrument an extended lease on life, and today I can still use it to make spectral measurements. Each time I power it up, it is like a trip back to the last century, and I must remember the DOS commands to set the time, date, and other settings before running the old measurement software. It is only slightly inconvenient that I must use a USB drive to transport the data to a modern computer before I can do any serious analysis and processing. Because the sensor head has such exquisite sensitivity and resolution, I am more than willing to go through these machinations.

Eventually there will come a time when the old computer fails to boot. I have already seen this happen a few times for unknown reasons. So far, powering down and trying again has brought it back to life. When all resuscitation efforts finally fail, however, the valuable head will suddenly and truly have no value. It will have become an abstract collection of highly engineered and exotic components entombed in a package, with no way of performing the complex task for which they were designed and assembled.

This is the hidden cost of modern instrumentation.
